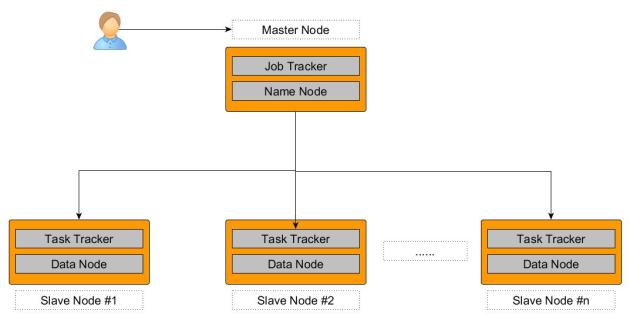

Hadoop 2.x Architecture

February 13, 2017

Hadoop 2.x addresses the limitations of Hadoop 1.x with a more generic design: instead of supporting only Hadoop MapReduce (MR V1), the cluster can also be used for Spark, Kafka, Storm, and other distributed frameworks. Hadoop 2.x is also referred to as YARN (Yet Another Resource Negotiator) or MapReduce V2.

Drawing parallels between Hadoop 1.x and Hadoop 2.x

- The Job Tracker is split into the Resource Manager and the Application Master

- The Task Tracker is split into the Node Manager and Containers

Components of MR V2

- Resource Manager (one per cluster): It does pure scheduling, i.e., it tracks the resources available across the cluster and assigns them to the competing applications. It has two modules:

- Scheduler: This is pluggable, so it can be swapped based on requirements, for example the Capacity Scheduler or the Fair Scheduler.

- Applications Manager: This accepts job submissions and is responsible for restarting the Application Master if it fails.

Note: Failure of the Resource Manager itself can be handled with ZooKeeper-based failover to a standby Resource Manager.
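To illustrate what "pluggable" means for the Scheduler, here is a minimal Python sketch. It is not YARN code: `ToyResourceManager`, `fifo_scheduler`, and `fair_share_scheduler` are hypothetical names, and the two policies are simplified stand-ins rather than the real Capacity or Fair Scheduler algorithms. The point is only that the Resource Manager delegates the allocation decision to a component that can be swapped.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Request:
    app: str         # hypothetical application name
    containers: int  # number of containers the application asks for


# In this toy model a scheduler is any function (requests, capacity) -> allocation.
Scheduler = Callable[[List[Request], int], Dict[str, int]]


def fifo_scheduler(requests: List[Request], capacity: int) -> Dict[str, int]:
    """Serve applications strictly in arrival order."""
    allocation = {}
    for req in requests:
        granted = min(req.containers, capacity)
        allocation[req.app] = granted
        capacity -= granted
    return allocation


def fair_share_scheduler(requests: List[Request], capacity: int) -> Dict[str, int]:
    """Hand out one container at a time, round-robin, until demand or capacity runs out."""
    allocation = {req.app: 0 for req in requests}
    remaining = {req.app: req.containers for req in requests}
    while capacity > 0 and any(remaining.values()):
        for req in requests:
            if capacity == 0:
                break
            if remaining[req.app] > 0:
                allocation[req.app] += 1
                remaining[req.app] -= 1
                capacity -= 1
    return allocation


class ToyResourceManager:
    """Holds cluster capacity and delegates the allocation policy to a scheduler."""

    def __init__(self, total_containers: int, scheduler: Scheduler):
        self.total_containers = total_containers
        self.scheduler = scheduler

    def allocate(self, requests: List[Request]) -> Dict[str, int]:
        return self.scheduler(requests, self.total_containers)


requests = [Request("app-1", 6), Request("app-2", 4)]
fifo_result = ToyResourceManager(8, fifo_scheduler).allocate(requests)
fair_result = ToyResourceManager(8, fair_share_scheduler).allocate(requests)
print(fifo_result)  # {'app-1': 6, 'app-2': 2}
print(fair_result)  # {'app-1': 4, 'app-2': 4}
```

With the same eight containers and the same two requests, only the plugged-in policy changes the outcome, which is the property the Scheduler module provides.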

- Application Master (one per application/job): This is responsible for negotiating resources with the Resource Manager. Once it gets the resources (containers inside slave nodes), it works with the Node Managers to get the tasks executed and tracks their status and progress. The major benefits this component brings to the architecture are:

- Scalability: By splitting resource management/scheduling from application life cycle management, the cluster can scale much further than with MR V1.

- Generic Architecture: By moving all the application-specific runtime complexities into the Application Master, we can launch any type of framework, such as Kafka, Storm, or Spark.

The Application Master also supports a very generic resource model: it can request specific resources such as the amount of RAM required, the number of cores, etc.
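The resource model can be sketched as a request for a number of containers, each with a given amount of memory and a number of cores, matched against what each node has free. This is a simplified Python illustration, not YARN's API: `Resource`, `Node`, `fits`, and `place` are hypothetical names, and the first-fit placement is an assumption made for brevity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Resource:
    memory_mb: int
    vcores: int


@dataclass
class Node:
    name: str
    free: Resource  # resources currently unallocated on this node


def fits(request: Resource, free: Resource) -> bool:
    """A container fits only if both memory and cores are available."""
    return request.memory_mb <= free.memory_mb and request.vcores <= free.vcores


def place(request: Resource, count: int, nodes: List[Node]) -> List[str]:
    """First-fit placement; returns the node chosen for each granted container.

    Fewer than `count` entries are returned if the cluster cannot satisfy the request.
    """
    placements = []
    for node in nodes:
        while len(placements) < count and fits(request, node.free):
            node.free = Resource(
                node.free.memory_mb - request.memory_mb,
                node.free.vcores - request.vcores,
            )
            placements.append(node.name)
    return placements


nodes = [Node("node-a", Resource(4096, 4)), Node("node-b", Resource(2048, 2))]
# Ask for 4 containers of 1024 MB and 2 cores each.
placements = place(Resource(1024, 2), 4, nodes)
print(placements)  # ['node-a', 'node-a', 'node-b']
```

Only three of the four requested containers are granted here: memory is still free on both nodes, but the cores are exhausted, which shows why the request carries more than one resource dimension.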

- Node Manager (one per slave node): This is responsible for launching containers inside the node with the amount of resources specified by the Application Master. YARN allows applications (via the AM) to launch any process (unlike MR V1, which ran only Java), specified with:

- the command to launch as a process within the container

- environment variables required for the process

- local resources required on that node prior to launch (such as any third-party jars)

- security tokens (if any)

- Container (one or many per node): This is the lowest-level slave node component, used to process the data.
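The four launch parameters listed for the Node Manager (command, environment variables, local resources, security tokens) can be pictured as a single launch specification. The Python sketch below is an illustration under stated assumptions, not YARN code: `LaunchSpec` and `launch` are hypothetical names, local resources are modeled as file paths that must already exist, and tokens are carried but not verified.

```python
import os
import subprocess
import sys
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LaunchSpec:
    command: List[str]                                         # process to start in the container
    environment: Dict[str, str] = field(default_factory=dict)  # extra environment variables
    local_resources: List[str] = field(default_factory=list)   # files needed before launch
    tokens: Dict[str, str] = field(default_factory=dict)       # carried only, not checked here


def launch(spec: LaunchSpec) -> str:
    """Check local resources, then run the command with the requested environment."""
    missing = [path for path in spec.local_resources if not os.path.exists(path)]
    if missing:
        raise FileNotFoundError(f"local resources not available: {missing}")
    env = {**os.environ, **spec.environment}
    result = subprocess.run(
        spec.command, env=env, capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


spec = LaunchSpec(
    # Any executable could go here; the current Python interpreter is used for portability.
    command=[sys.executable, "-c", "import os; print(os.environ['TASK_ID'])"],
    environment={"TASK_ID": "task-0"},
    local_resources=[sys.executable],
)
output = launch(spec)
print(output)  # task-0
```

Because the specification is just a command plus its surroundings, nothing in it ties the launched process to Java, which is the generality the Node Manager description points to.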

Hadoop 2.x/YARN/MR V2 Architecture

Application Flow

#1: The client submits a job to the cluster, where it is received by the Resource Manager (RM)

#2: The RM launches the Application Master (AM) in one of the available containers (here container #C5)

#3: On bootup, the AM registers itself with the RM and then requests the resources needed to run the job on the cluster

#4: The RM responds to the AM with 2 containers, #C1 and #C8

#5: The AM then asks the Node Managers (NM) of #C1 and #C8 to launch the containers

#6: While running the job, the containers report back to the AM

#7: While all of this is going on, the client communicates directly with the AM to get the status of the job

#8: Once the job is complete, the AM unregisters itself from the RM, making its container available for other jobs
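The flow above can be traced with a small, single-threaded Python simulation. It is a sketch only: `ToyRM` and `run_job` are hypothetical names, the container IDs are taken from the example in the steps, every task is assumed to succeed, and returning the worker containers as soon as they report is an assumption of this model. In a real cluster these interactions are concurrent and go over RPC.

```python
from typing import List


class ToyRM:
    """Tracks free containers and registered applications."""

    def __init__(self, free_containers: List[str]):
        self.free = list(free_containers)
        self.registered = set()

    def allocate(self, count: int) -> List[str]:
        granted, self.free = self.free[:count], self.free[count:]
        return granted

    def release(self, containers: List[str]) -> None:
        self.free.extend(containers)


def run_job(rm: ToyRM, job: str, tasks: int) -> List[str]:
    events = [f"1: client submits {job} to RM"]

    am_container = rm.allocate(1)[0]
    events.append(f"2: RM launches AM in {am_container}")

    rm.registered.add(job)
    events.append("3: AM registers with RM and requests containers")

    workers = rm.allocate(tasks)
    events.append(f"4: RM grants {workers}")

    progress = {}
    for container in workers:
        events.append(f"5: AM asks NM to launch {container}")
        progress[container] = "SUCCEEDED"  # assumption: every task succeeds
        events.append(f"6: {container} reports SUCCEEDED to AM")
        rm.release([container])            # finished worker container is returned

    events.append(f"7: client reads status from AM: {progress}")

    rm.registered.discard(job)
    rm.release([am_container])
    events.append("8: AM unregisters; its container is free again")
    return events


rm = ToyRM(["C5", "C1", "C8"])
events = run_job(rm, "job-1", tasks=2)
for line in events:
    print(line)
```

After the run, all three containers are back in the Resource Manager's free list and no application is registered, matching the final step.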
